Introduction

Vision-Language-Action (VLA) models have shown promising results in letting robots perform tasks in complex environments from visual input and textual instructions. Chunk-based action generation is central to these models: it supports long-horizon planning while keeping motion smooth within each chunk. However, a naive chunk-by-chunk execution strategy can produce jerky robot motion at chunk boundaries. In this blog post, we propose a predictive flow-matching-based action decoder that generates smooth action sequences; it is a key component of our lightweight VLA implementation.

(RoboGroove©)

Task: turn on the stove and put the moka pot on it

Method

Conceptually, a VLA has three logical components: visual perception, language comprehension, and action generation. Current (as of 2025) state-of-the-art VLA models typically use either a dual-system design, consisting of a VLM that understands the scene and task plus an action generator, or an end-to-end general policy that maps observations directly to actions.

Here, we implement a lightweight VLA model of the first kind (i.e., a VLM + Expert architecture) that targets low-cost consumer devices (e.g., an RTX 3060). We also want this VLA agent to output actions that produce motion that is as smooth as possible.

To achieve the first goal, we use the pretrained SmolVLM2 as a lightweight backbone for scene perception and task planning; with some layers removed from the pretrained model, it has about 0.35B parameters. For the second goal, temporal ensembling (TE) from ACT is a commonly used way to mitigate the discontinuity between adjacent chunks, although averaging overlapping predictions can yield invalid actions. We instead propose a new smoothness-oriented action generation method, whose model has about 0.13B parameters. Specifically, we make the following improvements:

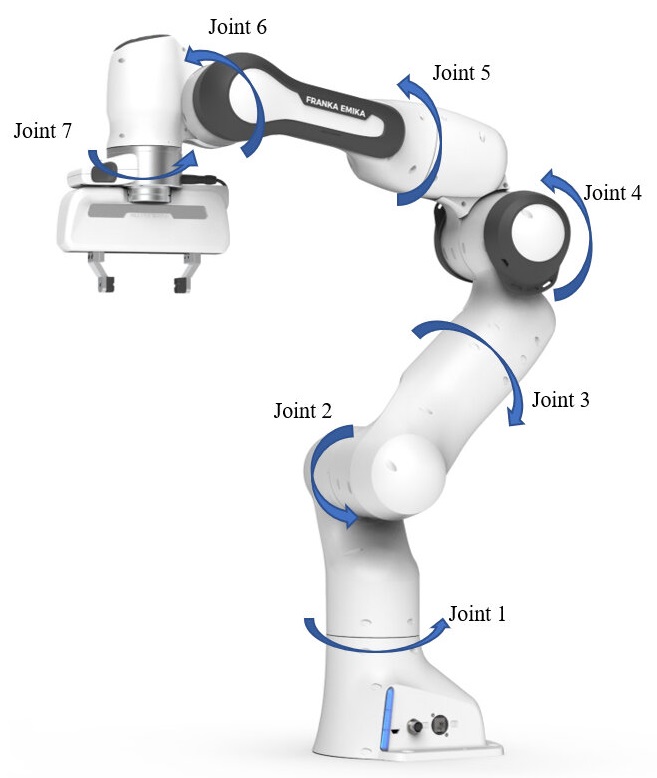

- New state feature representation: the robot arm's attitude (orientation) is represented by two perpendicular axes instead of the axis-angle (Rodrigues, AA) form.

- Predictive action chunk generation: in brief, we implement a 2D flow-matching-based action expert that models $p(A_{t} - A_{t-E} \mid O_{t}, A_{t-E})$ instead of $p(A_{t} \mid O_{t})$.
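
The two items above can be illustrated with a minimal, dependency-free sketch. It is not our actual implementation, and it rests on several assumptions: the "two perpendicular axes" are taken to be the first two columns of the rotation matrix; $A_{t-E}$ is read as the chunk predicted $E$ steps earlier; a chunk is flattened into a plain list of floats; and the flow is integrated with simple Euler steps. All function names, including the `velocity_fn` stand-in for the learned action expert, are hypothetical.

```python
import math

def axis_angle_to_matrix(rotvec):
    """Rodrigues' formula: axis-angle vector (axis * angle) -> 3x3 rotation matrix."""
    x, y, z = rotvec
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-12:  # no rotation
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = x / theta, y / theta, z / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def matrix_to_two_axes(R):
    """Assumed feature: the first two columns of R, i.e. two perpendicular unit axes."""
    return [R[0][0], R[1][0], R[2][0], R[0][1], R[1][1], R[2][1]]

def two_axes_to_matrix(feat):
    """Recover R from two (possibly noisy) axes: Gram-Schmidt, then a cross product."""
    def normalize(vec):
        n = math.sqrt(sum(c * c for c in vec))
        return [c / n for c in vec]
    a1 = normalize(feat[:3])
    dot = sum(p * q for p, q in zip(a1, feat[3:]))
    a2 = normalize([q - dot * p for p, q in zip(a1, feat[3:])])
    a3 = [a1[1] * a2[2] - a1[2] * a2[1],
          a1[2] * a2[0] - a1[0] * a2[2],
          a1[0] * a2[1] - a1[1] * a2[0]]
    return [[a1[i], a2[i], a3[i]] for i in range(3)]

def sample_chunk(velocity_fn, obs, prev_chunk, noise, steps=10):
    """Sample a residual (A_t - A_{t-E}) by Euler-integrating a velocity field
    from noise (tau=0) towards tau=1, then add it to the previous chunk."""
    delta = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        tau = i * dt
        vel = velocity_fn(delta, tau, obs, prev_chunk)  # hypothetical learned model
        delta = [d + dt * v for d, v in zip(delta, vel)]
    return [a + d for a, d in zip(prev_chunk, delta)]

def make_toy_velocity(target_delta):
    """Toy stand-in for the action expert: straight-line flow to a known residual."""
    def velocity_fn(x, tau, obs, prev_chunk):
        return [(t - xi) / (1.0 - tau) for t, xi in zip(target_delta, x)]
    return velocity_fn
```

For example, `sample_chunk(make_toy_velocity([0.1, -0.2]), None, [1.0, 2.0], [0.5, 0.5])` returns a chunk close to `[1.1, 1.8]`. Because the model only generates the residual, the new chunk is always expressed relative to the previous one, which is the intuition behind conditioning on $A_{t-E}$.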

Experiments

| Task | |
|---|---|
| put the black bowl in the bottom drawer of the cabinet and close it | |
| put both moka pots on the stove | |
| put the wine bottle on top of the cabinet | |
| put the bowl on the plate | |
| put the wine bottle on the rack | |
| ... | TO-ADD-MORE |